Website performance is one of the keys to a site's success, and it becomes vital as user traffic grows. Demand for the WordPress platform has grown rapidly in recent years, but few servers are tuned for the best possible performance and security when you expect thousands of users on your website. One of our clients (News Corp) was looking for performance improvements for their site kidspot.com.au and consulted us for the best possible solution.

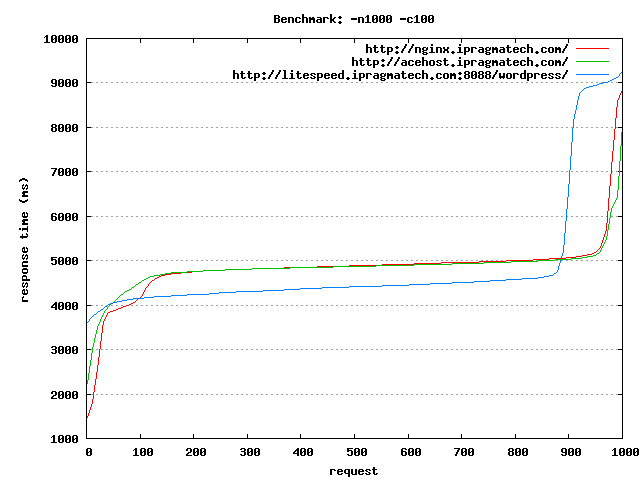

There are a huge number of open source and commercial web servers out there. Apache is the most common web server in use today, but its performance under high load is poor with the default PHP module. We investigated many web servers and shortlisted a few for our final decision. We picked OpenLiteSpeed, nginx and apache+nginx (combo) for performance load testing and are sharing the results in this blog.

Testing Environment:

Instead of testing a hello world page or some static files, we used the latest WordPress with imported test data to get a realistic picture. We used VPS servers with the following configuration:

- Intel Xeon E5-2620 (4 vcpu)

- 4GB RAM

- Apache Benchmark Tool

- 100 concurrent connections for 1000 requests
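As a rough illustration of the load profile listed above, the sketch below builds the corresponding Apache Benchmark invocation using ab's `-n` (total requests) and `-c` (concurrent connections) options. The helper names `build_ab_command` and `run_ab` are hypothetical, and the exact command line used for the original tests is not given in this post, so treat this as an assumption about how such a run could be launched.

```python
import shutil
import subprocess


def build_ab_command(url, requests=1000, concurrency=100):
    """Return the ab argument list for one benchmark run."""
    if concurrency > requests:
        # ab refuses a concurrency level above the total request count
        raise ValueError("concurrency cannot exceed the number of requests")
    # -n: total number of requests, -c: concurrent connections
    return ["ab", "-n", str(requests), "-c", str(concurrency), url]


def run_ab(url, requests=1000, concurrency=100):
    """Run ab and return its text report; requires ab on the PATH."""
    if shutil.which("ab") is None:
        raise RuntimeError("ab (Apache Benchmark) is not installed")
    cmd = build_ab_command(url, requests, concurrency)
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return result.stdout


if __name__ == "__main__":
    # Only prints the command; call run_ab() to actually generate load.
    print(" ".join(build_ab_command("http://nginx.pragmaapps.com/")))
```

Note that ab expects a URL with a path, so the trailing slash matters. Only run this against servers you own or are allowed to load test.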

Performance Optimization:

We optimized each server using our in-house high-performance tweaks. On all servers, the PHP platform (php-fpm), caching (APC) and the database were fine-tuned for high performance and availability. We installed nginx on http://nginx.pragmaapps.com, OpenLiteSpeed on http://litespeed.pragmaapps.com and nginx+apache (combo) on http://acehost.pragmaapps.com (we used nginx as a reverse proxy and apache+php-fpm+fastcgi to process PHP files).

Conclusion:

As per our benchmark, nginx+apache performs nearly 15% better than OpenLiteSpeed and nearly 10% better than nginx. We think the optimization of the PHP platform and the fine-tuning of Apache resulted in the better performance. OpenLiteSpeed seems to perform better when there is less load on the site, but its performance degrades once the load increases. The nginx+apache (combo) wins the race under higher user load, and hence we have chosen it to provide our optimized WordPress hosting in collaboration with Acehost. These results may vary based on the hardware configuration of dedicated or VPS plans.

Apache Benchmark Testing Data:

Server Software: apache–nginx

Server Hostname: acehost.pragmaapps.com

Server Port: 80

Document Path: /

Document Length: 56833 bytes

Concurrency Level: 100

Time taken for tests: 48.881 seconds

Complete requests: 1000

Failed requests: 8

(Connect: 0, Receive: 0, Length: 8, Exceptions: 0)

Write errors: 0

Non-2xx responses: 1

Total transferred: 57126998 bytes

HTML transferred: 56550211 bytes

Requests per second: 20.46 [#/sec] (mean)

Time per request: 4888.051 [ms] (mean)

Time per request: 48.881 [ms] (mean, across all concurrent requests)

Transfer rate: 1141.32 [Kbytes/sec] received

Connection Times (ms)

min mean[+/-sd] median max

Connect: 8 9 2.2 8 35

Processing: 2233 4820 451.5 4862 8046

Waiting: 2216 4803 451.0 4845 8029

Total: 2242 4829 451.6 4871 8057

Percentage of the requests served within a certain time (ms)

50% 4871

66% 4920

75% 4951

80% 4973

90% 5033

95% 5128

98% 6309

99% 6455

100% 8057 (longest request)
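The summary lines in the report above are related by simple arithmetic, which the sketch below reproduces from the request count, concurrency level and total time. The function name `ab_summary` is hypothetical. Results can differ from ab's own figures in the last digits, because ab computes them from the unrounded elapsed time.

```python
def ab_summary(complete_requests, concurrency, time_taken_s):
    """Derive ab's three headline figures from the raw totals."""
    # Requests per second (mean)
    rps = complete_requests / time_taken_s
    # Time per request (mean), as seen by one of the concurrent clients
    per_request_ms = concurrency * time_taken_s * 1000 / complete_requests
    # Time per request (mean, across all concurrent requests)
    per_request_all_ms = time_taken_s * 1000 / complete_requests
    return rps, per_request_ms, per_request_all_ms


# Totals copied from the apache-nginx report above
rps, per_request_ms, per_request_all_ms = ab_summary(1000, 100, 48.881)
print(f"{rps:.2f} req/s, {per_request_ms:.1f} ms, {per_request_all_ms:.3f} ms")
# prints: 20.46 req/s, 4888.1 ms, 48.881 ms
```

The same function applied to the nginx and LiteSpeed totals gives their reported 20.34 and 20.60 requests per second.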

Server Software: nginx/1.4.3

Server Hostname: nginx.pragmaapps.com

Server Port: 80

Document Path: /

Document Length: 59881 bytes

Concurrency Level: 100

Time taken for tests: 49.159 seconds

Complete requests: 1000

Failed requests: 0

Write errors: 0

Total transferred: 60301000 bytes

HTML transferred: 59881000 bytes

Requests per second: 20.34 [#/sec] (mean)

Time per request: 4915.853 [ms] (mean)

Time per request: 49.159 [ms] (mean, across all concurrent requests)

Transfer rate: 1197.91 [Kbytes/sec] received

Connection Times (ms)

min mean[+/-sd] median max

Connect: 0 0 0.8 0 4

Processing: 1481 4833 764.6 4884 8839

Waiting: 1061 2645 590.8 2609 6523

Total: 1481 4834 764.7 4884 8843

Percentage of the requests served within a certain time (ms)

50% 4884

66% 4947

75% 4979

80% 4999

90% 5072

95% 5181

98% 7335

99% 8624

100% 8843 (longest request)

Server Software: LiteSpeed

Server Hostname: litespeed.pragmaapps.com

Server Port: 8088

Document Path: /wordpress/

Document Length: 59604 bytes

Concurrency Level: 100

Time taken for tests: 48.549 seconds

Complete requests: 1000

Failed requests: 0

Write errors: 0

Total transferred: 60003000 bytes

HTML transferred: 59604000 bytes

Requests per second: 20.60 [#/sec] (mean)

Time per request: 4854.911 [ms] (mean)

Time per request: 48.549 [ms] (mean, across all concurrent requests)

Transfer rate: 1206.96 [Kbytes/sec] received

Connection Times (ms)

min mean[+/-sd] median max

Connect: 0 1 2.2 0 9

Processing: 3604 4823 1388.4 4414 9243

Waiting: 2212 3014 1382.2 2590 7330

Total: 3604 4824 1390.5 4414 9252

Percentage of the requests served within a certain time (ms)

50% 4414

66% 4488

75% 4543

80% 4578

90% 8705

95% 8948

98% 9054

99% 9118

100% 9252 (longest request)
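To read the three reports side by side, the sketch below collects a few figures from each (throughput, median, 90th and 99th percentile latency, failed requests) and prints the percentage difference against the nginx+apache run. The dictionary keys and the `pct_diff` helper are labels chosen for this illustration. The values are copied from the reports above, and the comparison covers only the single 100-connection run per server listed in this post.

```python
# Figures copied from the three ab reports above (latencies in ms)
runs = {
    "nginx+apache": {"rps": 20.46, "p50": 4871, "p90": 5033, "p99": 6455, "failed": 8},
    "nginx": {"rps": 20.34, "p50": 4884, "p90": 5072, "p99": 8624, "failed": 0},
    "openlitespeed": {"rps": 20.60, "p50": 4414, "p90": 8705, "p99": 9118, "failed": 0},
}


def pct_diff(value, reference):
    """Percentage by which value differs from reference."""
    return (value - reference) / reference * 100


baseline = runs["nginx+apache"]
for name, run in runs.items():
    print(
        f"{name:14s} "
        f"rps {run['rps']:.2f} ({pct_diff(run['rps'], baseline['rps']):+.2f}%)  "
        f"p50 {run['p50']} ms ({pct_diff(run['p50'], baseline['p50']):+.1f}%)  "
        f"p90 {run['p90']} ms ({pct_diff(run['p90'], baseline['p90']):+.1f}%)  "
        f"p99 {run['p99']} ms ({pct_diff(run['p99'], baseline['p99']):+.1f}%)  "
        f"failed {run['failed']}"
    )
```

Comparing percentiles as well as the mean helps here, because the tail latencies in these runs differ far more between servers than the mean throughput does.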