We have been working with a client whose SocialEngine-based website has fairly heavy user traffic and a lot of data. Because the site is popular and its data changes daily, we have to take a backup every day in case something goes wrong and we need to restore it. Storing the backups on a local server requires extra disk space, which is costly in terms of hardware. So we explored other options for storing the data securely, efficiently, and relatively cheaply.

We researched the options and found that Amazon S3 best fits our requirements. S3 storage is much cheaper than SSD storage on the server and requires no maintenance. We didn’t find any third-party plugin that could back up our website the way we needed. There were a few backup plugins, but they failed to take the backup properly.

Amazon S3 stores data as objects in secure object storage. With versioning enabled on a bucket, it lets you preserve, retrieve, and restore every version of every object in that bucket, so you can easily recover when something is deleted accidentally by a user or lost through an application failure. With Amazon S3 you pay only for the storage you actually use; there is no minimum fee and no setup cost. So we decided to use it to store the backups for the website.

The best thing about using S3 is that a command-line utility, s3cmd, takes care of storing the data in the right bucket. You just run the command from a shell script and the data is stored in the S3 bucket without any hassle. We are sharing the script on GitHub so that you can use it directly for your SocialEngine website.

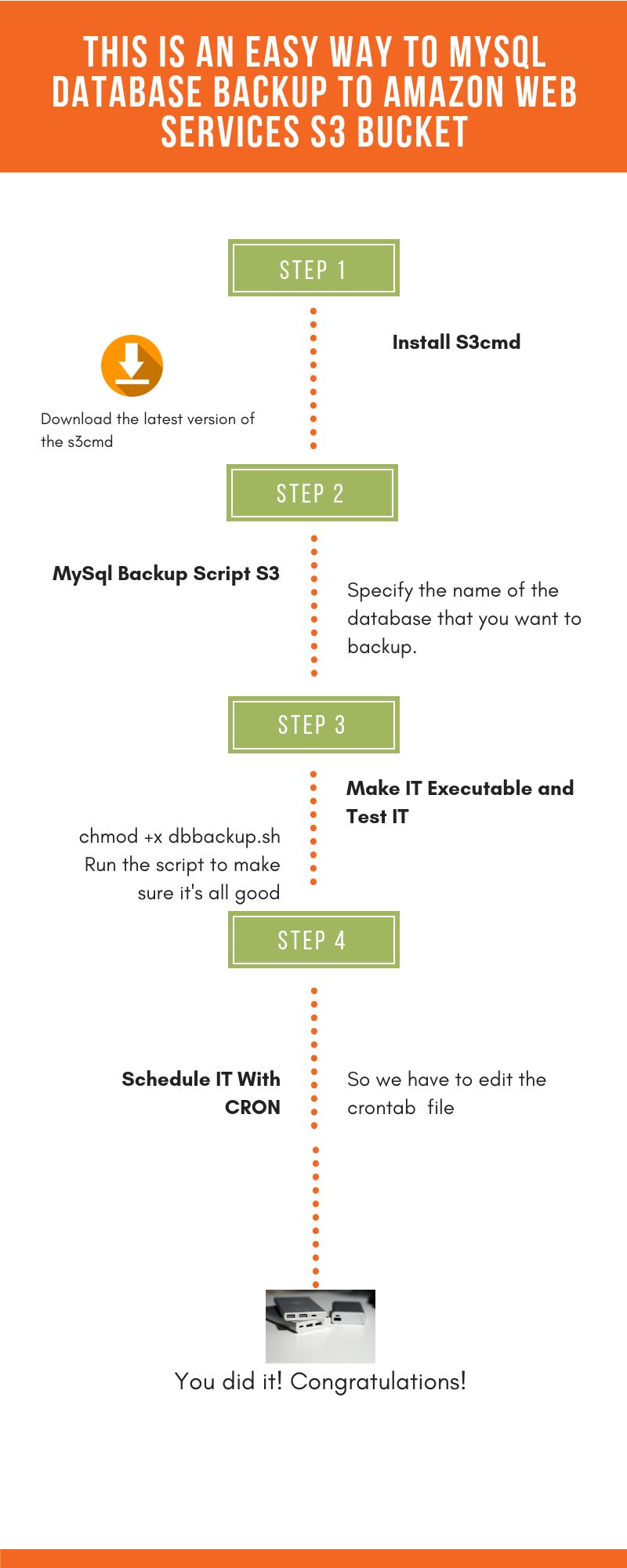

Steps to back up a SocialEngine-based website

We will go through the steps for backing up the database in detail. If you like, you can skip the steps and download the script directly, though we recommend reading the full article.

Here is the checklist for your server:

- The s3cmd command-line tool is installed and configured on the server.

- A bucket on S3 to store the dump file.

- A Bash script that contains the MySQL credentials (hostname, username, password, database name), the location on your server where the dump is stored (PATH), and the log path.

- Make the backup script (dbbackup.sh) executable with chmod +x.

- Test it and check the S3 bucket.

- Schedule the MySQL database backup with crontab as per your requirements.
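Before going through the steps, it can help to confirm that the tools from the checklist are actually present on the server. The following is a minimal sketch, not part of the original script; the function name `check_tools` and the list of tools passed to it are assumptions made for illustration.

```shell
#!/bin/bash
# Preflight check: report every required command that is missing from PATH.
# The tool list is supplied by the caller; adjust it to your own setup.
check_tools() {
    missing=0
    for tool in "$@"; do
        if ! command -v "$tool" >/dev/null 2>&1; then
            echo "missing: $tool" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Example:
#   check_tools s3cmd mysqldump gzip || exit 1
```

Calling it at the top of a backup script makes a missing tool show up as a clear message instead of a failure halfway through the dump.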

Step 1. Install s3cmd:

Download the latest version of s3cmd from this link: https://sourceforge.net/projects/s3tools/?source=typ_redirect

For example, to install version 1.6.0 from the command line:

    sudo apt-get -y install python-setuptools
    wget http://netix.dl.sourceforge.net/project/s3tools/s3cmd/1.6.0/s3cmd-1.6.0.tar.gz
    tar xvfz s3cmd-1.6.0.tar.gz
    cd s3cmd-1.6.0
    sudo python setup.py install

Then run s3cmd --configure. You will be asked for two keys, the Access Key and the Secret Key, which are your identifiers for Amazon S3. Copy and paste them from your confirmation email or from your Amazon account page.

They are case-sensitive and must be entered exactly, or you’ll keep getting errors about invalid signatures or similar.

You can optionally enter a GPG encryption key that will be used to encrypt your files before they are sent to Amazon. Using GPG encryption protects your data from being read by Amazon staff or by anyone else who may get access to the files while they’re stored on Amazon S3.

Other advanced settings can be changed (if needed) by editing the config file manually. Some of the settings contain the default values for s3cmd to use.

The following is an example of an s3cmd config file (.s3cfg). Here bucket_location is set to ap-south-1, the region code for Mumbai:

    [default]
    access_key = <your key>
    access_token =
    add_encoding_exts =
    add_headers =
    bucket_location = ap-south-1
    ca_certs_file =
    cache_file =
    check_ssl_certificate = True
    check_ssl_hostname = True
    cloudfront_host = cloudfront.amazonaws.com
    default_mime_type = binary/octet-stream
    delay_updates = False
    delete_after = False
    delete_after_fetch = False
    delete_removed = False
    dry_run = False
    enable_multipart = True
    encoding = UTF-8
    encrypt = False
    expiry_date =
    expiry_days =
    expiry_prefix =
    follow_symlinks = False
    force = False
    get_continue = False
    gpg_command = /usr/bin/gpg
    gpg_decrypt = %(gpg_command)s -d --verbose --no-use-agent --batch --yes --passphrase-fd %(passphrase_fd)s -o %(output_file)s %(input_file)s
    gpg_encrypt = %(gpg_command)s -c --verbose --no-use-agent --batch --yes --passphrase-fd %(passphrase_fd)s -o %(output_file)s %(input_file)s
    gpg_passphrase =
    guess_mime_type = True
    host_base = s3.amazonaws.com
    host_bucket = %(bucket)s.s3.amazonaws.com
    human_readable_sizes = False
    ...
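Since the config file holds your access keys, it is worth making sure only its owner can read it. The sketch below is an illustration rather than part of the original setup; the function name `secure_s3cfg` is hypothetical, and the default path assumes the config lives at ~/.s3cfg as in the example above.

```shell
#!/bin/bash
# Restrict an s3cmd config file to its owner, since it stores the access keys.
# Takes an optional path; defaults to ~/.s3cfg.
secure_s3cfg() {
    cfg="${1:-$HOME/.s3cfg}"
    if [ ! -f "$cfg" ]; then
        echo "config file not found: $cfg" >&2
        return 1
    fi
    chmod 600 "$cfg"
}

# Example:
#   secure_s3cfg     # lock down ~/.s3cfg
#   s3cmd ls         # should list your buckets if the keys are valid
```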

Step 2. MySQL database backup script for S3:

This shell script backs up your database and uploads it to S3.

The idea is simple: create the following script and run it with the appropriate variables set. It uses mysqldump to dump the database to a temporary file and then uploads that file to S3 with s3cmd.
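If the credentials come from environment variables, one option is to write them into a private MySQL option file, so the password does not appear on the mysqldump command line where other users could see it in the process list. This is a sketch under assumptions: `DB_USER`, `DB_PASSWORD`, `DB_HOST` and the function name `write_mysql_cnf` are hypothetical names chosen for the example, and the simple quoting used here does not handle passwords that contain double quotes or backslashes.

```shell
#!/bin/bash
# Write a private MySQL option file from environment variables.
# DB_USER, DB_PASSWORD and DB_HOST are hypothetical names for this sketch.
# Prints the path of the generated file on stdout.
write_mysql_cnf() {
    cnf=$(mktemp) || return 1
    chmod 600 "$cnf"
    {
        printf '[client]\n'
        printf 'user=%s\n' "$DB_USER"
        printf 'password="%s"\n' "$DB_PASSWORD"
        printf 'host=%s\n' "$DB_HOST"
    } > "$cnf"
    echo "$cnf"
}

# Example (--defaults-extra-file must be the first option):
#   cnf=$(write_mysql_cnf)
#   mysqldump --defaults-extra-file="$cnf" "$DB_NAME" | gzip -9 > dump.sql.gz
#   rm -f "$cnf"
```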

    #!/bin/bash
    # Save this file as dbbackup.sh
    # Make the dump pipeline fail when mysqldump fails, not only when gzip fails
    set -o pipefail

    # Database credentials: specify the database that you want to back up
    USER="DB-USER"
    PASSWORD="PASSWORD"
    HOST="DB-host-name"
    DB_NAME="Database-name"

    # Backup directory, timestamp, and target bucket
    BACKUPROOT="/tmp/backups"
    TSTAMP=$(date +"%d-%b-%Y-%H-%M-%S")
    S3BUCKET="s3://s3bucket"
    #LOG_ROOT="logs/dump.log"

    mkdir -p "$BACKUPROOT"

    # Uncompressed alternative:
    # mysqldump -h "$HOST" -u "$USER" -p"$PASSWORD" "$DB_NAME" > "$BACKUPROOT/$DB_NAME-$TSTAMP.sql"

    # Dump the database and compress it
    if ! mysqldump -h "$HOST" -u "$USER" -p"$PASSWORD" "$DB_NAME" | gzip -9 > "$BACKUPROOT/$DB_NAME-$TSTAMP.sql.gz"
    then
        # The dump failed: remove the partial file and upload nothing
        echo "mysqldump failed, nothing uploaded" >&2
        rm -f "$BACKUPROOT/$DB_NAME-$TSTAMP.sql.gz"
        exit 1
    fi

    # Upload the dump, then clean the local directory only if the upload succeeded
    s3cmd sync -r "$BACKUPROOT/" "$S3BUCKET/$TSTAMP/" && rm -rf "${BACKUPROOT:?}"/*
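A dump that was cut short can still leave a file behind, so it may be worth checking the archive before it is uploaded. The following sketch is an addition, not part of the original script; the function name `verify_dump` is hypothetical. It relies on `gzip -t`, which tests an archive's integrity, and also rejects an archive whose decompressed content is empty.

```shell
#!/bin/bash
# Return success only if the file is an intact gzip archive with content.
verify_dump() {
    dump="$1"
    # gzip -t tests the archive without writing any output
    if ! gzip -t "$dump" 2>/dev/null; then
        echo "missing or corrupt gzip archive: $dump" >&2
        return 1
    fi
    # An archive of an empty stream is still valid, so check for content too
    bytes=$(gzip -dc "$dump" | head -c 1 | wc -c)
    if [ "$bytes" -eq 0 ]; then
        echo "archive is empty: $dump" >&2
        return 1
    fi
}

# Example (run before the upload step):
#   verify_dump "$BACKUPROOT/$DB_NAME-$TSTAMP.sql.gz" || exit 1
```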

Step 3. Let’s run the script now:

Make the script executable, then run it once by hand to check that everything works:

    chmod +x dbbackup.sh
    # Run the script to make sure it's all good
    bash dbbackup.sh

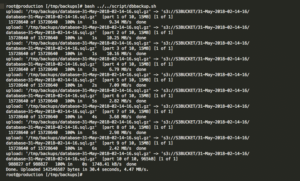

Backup script Output:-

Step 4. Schedule it with crontab:

Assuming the backup script is stored in the /opt/scripts directory, we need to add a crontab entry to run it automatically every week.

Edit the crontab file:

    vim /etc/crontab

Add the following lines. Entries in /etc/crontab need a user field, here root:

    # Run the database backup script every Sunday at 00:00 (midnight)
    0 0 * * 0 root bash /opt/scripts/dbbackup.sh >/dev/null 2>&1
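Because the cron entry sends all output to /dev/null, a failed backup would go unnoticed. One way to keep a record is a small wrapper that writes the output and exit status to a log file, in the spirit of the log path mentioned in the checklist. This is a sketch only; the function name `run_logged` and the log path in the example are assumptions.

```shell
#!/bin/bash
# Run a command, appending its output and timestamped status lines to a log.
# Usage: run_logged LOGFILE COMMAND [ARGS...]
run_logged() {
    log="$1"
    shift
    echo "$(date '+%Y-%m-%d %H:%M:%S') START $*" >> "$log"
    "$@" >> "$log" 2>&1
    status=$?
    echo "$(date '+%Y-%m-%d %H:%M:%S') END exit=$status" >> "$log"
    return "$status"
}

# Example (hypothetical log path):
#   run_logged /var/log/dbbackup.log bash /opt/scripts/dbbackup.sh
```

The wrapper returns the exit status of the wrapped command, so a calling script can still react to a failed backup.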

Conclusion

In this article, we explained how to automate MySQL backups directly to an AWS S3 bucket. Just configure and schedule the script on your MySQL server and you are done. Cron then runs the backup regularly according to your pre-configured schedule. Feel free to ask any questions; we would be glad to hear from you.